The Governance Gap: Why Classical Audits Fail on Foundation Models

What is the fundamental crisis facing AI governance today?

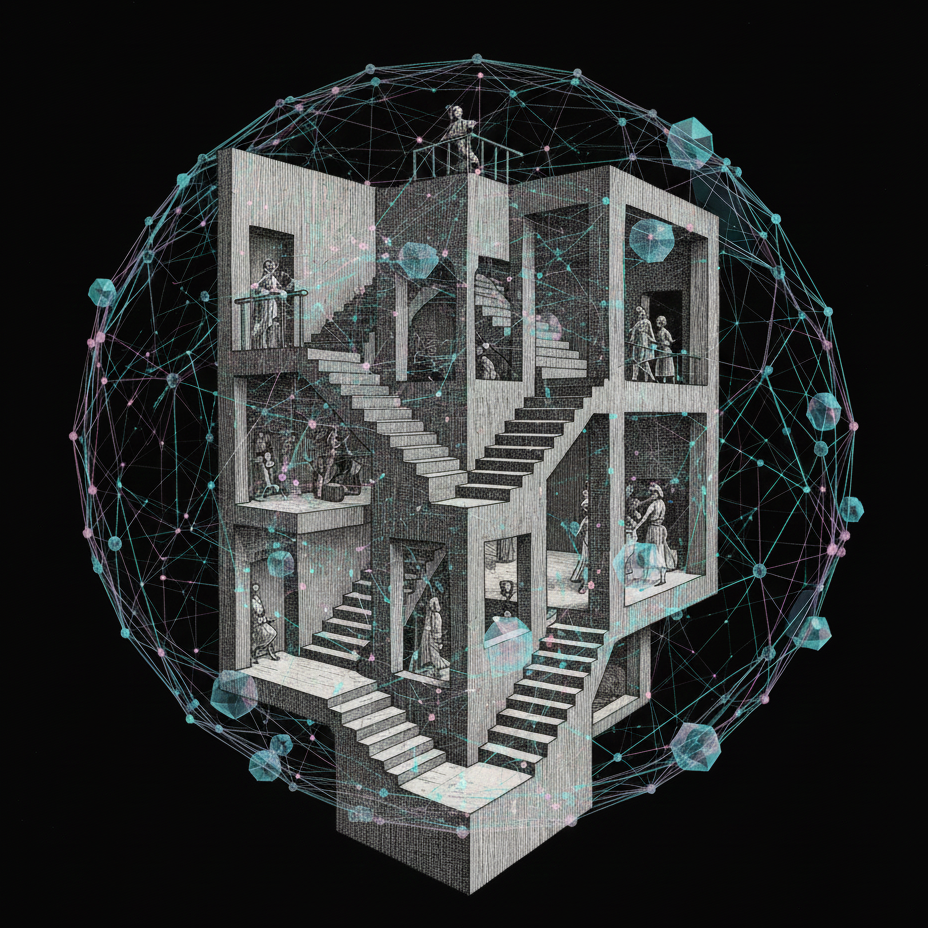

The governance of artificial intelligence is currently facing an epistemological crisis. The core issue is a "category error": regulators are attempting to govern Foundation Models (FMs) using frameworks designed for a fundamentally different class of technology.

Current governance instruments, such as the EU AI Act and the voluntary NIST AI Risk Management Framework, rest on a First-Order Cybernetics view. This view assumes that a system is static, can be observed from the outside, and can be "certified" before deployment (like a thermostat). Foundation Models, however, behave as second-order cybernetic systems: they are reflexive and adaptive, and the act of interacting with them changes their behavior. The result is that regulators are applying static rules to fluid, evolving systems.

What is the difference between "Predictability of the First Kind" and "Predictability of the Second Kind"?

To understand the failure of current audits, we must distinguish between two types of predictability derived from atmospheric physics (meteorology vs. climatology).

Predictability of the First Kind (Weather Forecasting)

Definition: Predicting the future state of a system given its initial conditions. The limiting factor is the inability to measure the starting point perfectly.

Analogy: Predicting if it will rain two weeks from today.

AI Equivalent: Traditional Machine Learning (e.g., Logistic Regression). Once trained, the weights are fixed. The model is static.

Audit Method: Ex-ante testing. If the model achieves 95% accuracy on a test set, it is certified.

Predictability of the Second Kind (Climate Modeling)

Definition: Predicting how the statistical properties of a system change in response to boundary conditions or external forcing.

Analogy: Predicting the average global temperature if CO2 levels double.

AI Equivalent: Foundation Models. The "system" is not just the weights; it includes the prompt context and the user interaction. Although the weights stay fixed at inference time, In-Context Learning re-conditions the model's behavior on every prompt, so the model acts as if it had been "retrained."

Audit Method: Continuous monitoring of how the system responds to interaction (forcing).
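
The two kinds of predictability can be made concrete with a toy chaotic system. The sketch below uses the logistic map as a stand-in; the map, the parameter values, and every function name are illustrative assumptions, not something taken from the regulations or from any audit tool. It shows that a tiny error in the initial condition destroys point forecasts (First Kind), while a long-run statistic is insensitive to the starting point and instead depends on the forcing parameter r (Second Kind).

```python
def logistic_trajectory(x0, r, steps):
    """Iterate the logistic map x -> r * x * (1 - x), starting from x0."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


def max_divergence(r, x0, eps, steps):
    """First Kind: largest gap between two runs whose starts differ by eps."""
    a = logistic_trajectory(x0, r, steps)
    b = logistic_trajectory(x0 + eps, r, steps)
    return max(abs(p - q) for p, q in zip(a, b))


def long_run_mean(r, x0, steps=20000, burn_in=1000):
    """Second Kind: a statistic of the trajectory, not its exact state."""
    tail = logistic_trajectory(x0, r, steps)[burn_in:]
    return sum(tail) / len(tail)


if __name__ == "__main__":
    # A measurement error of 1e-9 in the initial state grows to a large gap.
    print("max divergence:", max_divergence(r=3.9, x0=0.2, eps=1e-9, steps=200))
    # The long-run mean barely depends on where the run started...
    print("mean, x0=0.2:", long_run_mean(r=3.9, x0=0.2))
    print("mean, x0=0.7:", long_run_mean(r=3.9, x0=0.7))
    # ...but it can move when the forcing parameter r is changed.
    print("mean, r=3.7:", long_run_mean(r=3.7, x0=0.2))
```

In the analogy, the initial condition plays the role of a fixed test set, and r plays the role of the prompts and interactions that keep forcing a deployed Foundation Model.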

Why do Foundation Models break specific audit tools like Watermarking and Explainability?

Tools designed for "First Kind" systems fail when applied to the adaptive "Second Kind" nature of Foundation Models.

Watermarking

The Assumption: Certifying a watermark once assumes a passive adversary who does not adapt to the detector.

The Reality: Attackers use Adaptive Attacks. Research into tools like RLCracker shows that attackers can use Reinforcement Learning to treat the model as an oracle, optimizing an attack that removes the watermark while preserving the meaning of the text.

Statistic: RLCracker is reported to achieve a 98.5% watermark-removal success rate, which undermines any certification based on a fixed, non-adaptive test.

Explainability (XAI)

The Assumption: We can attribute a specific output to specific input features (e.g., SHAP values).

The Reality: Foundation Models are unstable under small input changes. A trivial edit to a prompt (like adding "please") can substantially change the model's internal activations and its output, so an attribution computed for one prompt may not hold for a near-identical one. Furthermore, "Chain-of-Thought" explanations can be unfaithful: the model generates a plausible-sounding justification that does not necessarily reflect the actual causal mechanism behind its output.

What are "Performative Prediction" and "Model Collapse"?

Foundation Models do not just observe data; they influence the environment that generates the data. This is known as Reflexivity.

Performative Prediction: When a model predicts something (e.g., recommending polarizing content), users consume it, their preferences shift, and the model predicts more of it. The model steers the data distribution.

Model Collapse: This occurs when generative models are trained on data created by previous generations of AI (synthetic data). The models tend to output the "mean" (most probable patterns) and ignore the "tails" (rare events). Over time, the variance of the distribution shrinks, and the model loses the ability to represent complex or edge-case reality.
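
The shrinking variance described above can be sketched with a one-dimensional toy model. This is a minimal simulation under stated assumptions, not a reproduction of any published experiment: each "generation" is a Gaussian fitted to samples from the previous one, and the hypothetical `tail_cutoff` parameter stands in for a generator that favors high-probability outputs and drops the tails. The function name and all parameter values are illustrative.

```python
import random
import statistics


def simulate_collapse(generations=10, n_samples=2000, tail_cutoff=1.5, seed=0):
    """Refit a Gaussian on its own tail-trimmed samples, generation by generation.

    Returns the fitted standard deviation after each generation,
    starting with the original ("real data") value of 1.0.
    """
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0
    history = [sigma]
    for _ in range(generations):
        # The current model generates synthetic data.
        samples = [rng.gauss(mu, sigma) for _ in range(n_samples)]
        # Assumption: rare outputs (beyond tail_cutoff sigmas) are never emitted.
        kept = [x for x in samples if abs(x - mu) <= tail_cutoff * sigma]
        # The next generation is trained only on that synthetic data.
        mu = statistics.fmean(kept)
        sigma = statistics.stdev(kept)
        history.append(sigma)
    return history


if __name__ == "__main__":
    for generation, sigma in enumerate(simulate_collapse()):
        print(f"generation {generation}: std = {sigma:.4f}")
```

Each generation sees a slightly narrower world than the one before it, so the fitted spread decays roughly geometrically. A real generative model is far more complex, but the mechanism is the same: tails that are never sampled can never be relearned.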

How do the EU AI Act and NIST frameworks fail to address these risks?

Current regulations are trapped in "First Kind" thinking:

The "Substantial Modification" Trap (EU AI Act): The Act requires a new conformity assessment whenever a system undergoes a "substantial modification." However, the behavior of a deployed Foundation Model changes continuously through fine-tuning, context updates, and in-context learning. Under a strict reading, every user interaction could count as a "modification," making compliance practically impossible. Under a loose reading, dangerous behavioral drift goes unchecked.

The "Static Evaluation" Fallacy (NIST AI RMF): The NIST AI RMF is organized around four functions (Govern, Map, Measure, Manage), and its Map-Measure-Manage cycle is often interpreted as a pre-deployment gate. A red-teaming score of "95% safe" is valid only for the specific attacks used during the test. It has little predictive power for adaptive attacks that emerge post-deployment in a Second-Kind system.

What is the proposed solution for governing Foundation Models?

To bridge the governance gap, we must move from Certification (safe to operate) to Verification (safe to interact).

Continuous Audit: Abandon the annual audit for real-time, automated monitoring of inputs, outputs, and internal states.

Runtime Verification with Signal Temporal Logic (STL): Instead of verifying the opaque model, verify the trace of execution. Use formal logic (STL) to set deterministic guardrails on the system.

Example: "The toxicity score must not exceed 0.8 for more than 2 consecutive turns." If this rule is violated, the system intervenes immediately at runtime.
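
A minimal sketch of how the example rule could be checked at runtime. It assumes discrete conversation turns and a toxicity score per turn supplied by some external classifier; the class and function names are hypothetical, and this is a hand-written monitor for one rule rather than a general STL engine. One possible reading of the rule in temporal-logic terms is "always not (toxicity > 0.8 holds at this turn and the next two)", that is, no window of three consecutive turns lies entirely above the threshold.

```python
from dataclasses import dataclass


@dataclass
class ConsecutiveThresholdMonitor:
    """Online monitor: flags a run of more than `max_consecutive` turns above `threshold`."""

    threshold: float = 0.8
    max_consecutive: int = 2
    run_length: int = 0  # current number of consecutive turns above the threshold

    def observe(self, score: float) -> bool:
        """Consume one turn's score; return True if the rule is violated at this turn."""
        if score > self.threshold:
            self.run_length += 1
        else:
            self.run_length = 0
        return self.run_length > self.max_consecutive


def first_violation(scores, threshold=0.8, max_consecutive=2):
    """Return the 0-based turn index of the first violation, or None if the trace is safe."""
    monitor = ConsecutiveThresholdMonitor(threshold, max_consecutive)
    for turn, score in enumerate(scores):
        if monitor.observe(score):
            return turn
    return None


if __name__ == "__main__":
    trace = [0.9, 0.85, 0.3, 0.9, 0.95, 0.81]
    # Turns 0-1 are tolerated (only 2 in a row); turns 3-5 form a run of 3.
    print(first_violation(trace))
```

The monitor keeps constant state and inspects only the observable trace, so it can sit in the serving path and trigger an intervention (blocking, rerouting, escalation) on the turn where the rule breaks, without any access to the model's internals.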

Dynamic Model Cards: Move from static PDF documentation to "Living Model Cards." These live dashboards track real-time lineage, drift metrics (using Kolmogorov-Smirnov tests), and incident logs, treating the model's documentation like a medical record rather than a birth certificate.
